Stream Systems

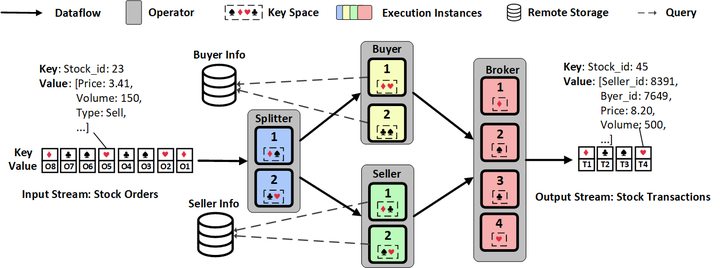

Stream processing is a big data technology that focuses on the real-time processing of continuous streams of data in motion. A stream processing framework simplifies parallel hardware and software by restricting the kinds of parallel computation that can be performed. Pipelined kernel functions are applied to each element of a data stream, and on-chip memory reuse minimizes the bandwidth lost to external memory accesses. Stream processing tools and technologies come in several forms: distributed publish-subscribe messaging systems such as Kafka, distributed real-time computation systems such as Storm, and streaming dataflow engines such as Flink.
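The idea of pipelined kernels applied to each stream element can be sketched in a few lines of Python. This is a minimal illustration of the dataflow shape only, assuming a "kernel" is any single-element function; it does not model on-chip memory reuse, and the names `pipeline`, `calibrate`, and `celsius_to_fahrenheit` (and the 1.0 calibration offset) are hypothetical.

```python
from itertools import count, islice
from typing import Callable, Iterable, Iterator

# Hypothetical alias: a "kernel" is any function applied to one element at a time.
Kernel = Callable[[float], float]


def pipeline(source: Iterable[float], *kernels: Kernel) -> Iterator[float]:
    """Push each element through every kernel, in order, as it arrives.

    Because this is a generator, one element passes through the whole chain
    before the next element is read, so an unbounded source also works.
    """
    for element in source:
        for kernel in kernels:
            element = kernel(element)
        yield element


def calibrate(c: float) -> float:
    # Hypothetical sensor correction: add a fixed 1.0 degree offset.
    return c + 1.0


def celsius_to_fahrenheit(c: float) -> float:
    return c * 9 / 5 + 32


if __name__ == "__main__":
    # A finite batch of readings flowing through two kernels.
    print(list(pipeline([19.0, 24.0, 29.0], calibrate, celsius_to_fahrenheit)))
    # An unbounded source: only the first three results are ever computed.
    unbounded = pipeline((float(n) for n in count()), calibrate)
    print(list(islice(unbounded, 3)))
```

Using generators keeps each stage stateless and lazy, which mirrors how a stream engine processes data in motion rather than waiting for a complete dataset.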

Distributed stream processing systems use geographically distributed architectures to process large data streams in real time, which improves the efficiency and reliability of data ingestion, data processing, and the presentation of data for analysis. The term can also refer to an organization's ability to centrally process streams that originate from geographically dispersed sources, such as IoT-connected devices and cellular data towers. Because the hardware and software are spread across locations, distributing stream processing also supports a more scalable disaster recovery strategy: a failure at one site need not halt processing everywhere.
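Centrally processing streams from dispersed sources can be sketched as a time-ordered merge followed by a running aggregation. This is a simplified sketch under a strong assumption: each source already emits events in timestamp order, which `heapq.merge` requires. Real engines must also handle late or out-of-order events (Flink, for example, uses watermarks for this). The `Event` type, `merge_streams`, `running_totals`, and the source names are hypothetical.

```python
import heapq
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator


@dataclass(frozen=True)
class Event:
    timestamp: float  # seconds; assumed comparable across all sources
    source: str       # hypothetical device or tower identifier
    value: float


def merge_streams(*streams: Iterable[Event]) -> Iterator[Event]:
    """Interleave per-source streams into one time-ordered stream.

    heapq.merge reads lazily from every input, but each input must already
    be sorted by timestamp for the merged output to be ordered.
    """
    return heapq.merge(*streams, key=lambda event: event.timestamp)


def running_totals(events: Iterable[Event]) -> Iterator[Dict[str, float]]:
    """Central aggregation: yield a snapshot of per-source totals after each event."""
    totals: Dict[str, float] = {}
    for event in events:
        totals[event.source] = totals.get(event.source, 0.0) + event.value
        yield dict(totals)  # copy so earlier snapshots are not mutated


if __name__ == "__main__":
    tower_a = [Event(1.0, "tower-a", 5.0), Event(3.0, "tower-a", 2.0)]
    sensor_b = [Event(2.0, "sensor-b", 7.0), Event(4.0, "sensor-b", 1.0)]
    for snapshot in running_totals(merge_streams(tower_a, sensor_b)):
        print(snapshot)
```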

Microservices, an architectural style for building distributed applications, allow each service to scale or be updated without disrupting the other services in the application. Microservices stream processing brings the data streams produced by these individual services together in one central place, so systems and IT engineers can monitor the health and security of each microservice and ensure an uninterrupted end-user experience.
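One way to picture centralized monitoring is a publish-subscribe pattern: every service publishes health events to a shared topic, and a single monitor consumes them. The sketch below uses a hypothetical in-memory `Broker` as a stand-in for a messaging system such as Kafka; it is not Kafka's API. `HealthEvent`, `HealthMonitor`, the `"service-health"` topic, and the 500 ms latency limit are all illustrative assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, Dict, List


@dataclass(frozen=True)
class HealthEvent:
    service: str
    healthy: bool
    latency_ms: float


Handler = Callable[[HealthEvent], None]


class Broker:
    """Hypothetical in-memory publish-subscribe broker (not a real Kafka client)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: HealthEvent) -> None:
        # Deliver synchronously to every handler registered on this topic.
        for handler in self._subscribers[topic]:
            handler(event)


class HealthMonitor:
    """Central consumer that keeps the latest status reported by each service."""

    def __init__(self, latency_limit_ms: float = 500.0) -> None:
        self.latency_limit_ms = latency_limit_ms  # assumed threshold
        self.status: Dict[str, bool] = {}

    def on_event(self, event: HealthEvent) -> None:
        # A service counts as healthy only if it says so and responds fast enough.
        ok = event.healthy and event.latency_ms <= self.latency_limit_ms
        self.status[event.service] = ok

    def unhealthy(self) -> List[str]:
        return sorted(name for name, ok in self.status.items() if not ok)


if __name__ == "__main__":
    broker = Broker()
    monitor = HealthMonitor()
    broker.subscribe("service-health", monitor.on_event)
    broker.publish("service-health", HealthEvent("orders", True, 120.0))
    broker.publish("service-health", HealthEvent("payments", True, 900.0))
    broker.publish("service-health", HealthEvent("inventory", False, 50.0))
    print(monitor.unhealthy())
```

Because services only publish events and never call the monitor directly, a service can be scaled or redeployed without changing the monitoring side, which is the decoupling the paragraph above describes.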